On May 26th, Facebook announced the launch of a program designed to curb the spread of misinformation on the platform, including false or misleading information about important issues such as COVID-19, vaccination efforts, climate change and politics.

The program will expand Facebook’s fact-checking initiatives and introduce penalties against Pages and individual users who repeatedly share misinformation with their networks.

Following the launch of the program, users can expect to see a few changes within the platform.

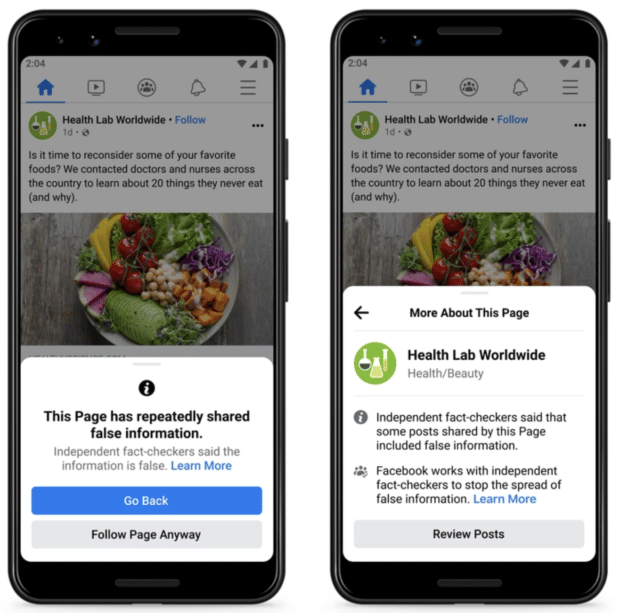

First of all, a warning pop-up will be displayed to users who try to like or follow a Page that has repeatedly shared content flagged by Facebook’s fact-checkers. The pop-up will explain why the Page has been flagged and give users the choice to move forward with the like or follow, or cancel the action. It will also offer more insight into the fact-checking program.

Source: Facebook

Facebook is also limiting the visibility of individual accounts that spread misinformation. If an account is flagged for repeatedly sharing false or misleading content, the Facebook algorithm will reduce the reach of that account's posts in its network's news feeds.

Users who share misinformation will now also receive detailed notifications outlining the potential penalties for spreading false information as well as the reasons a specific piece of content has been flagged.
